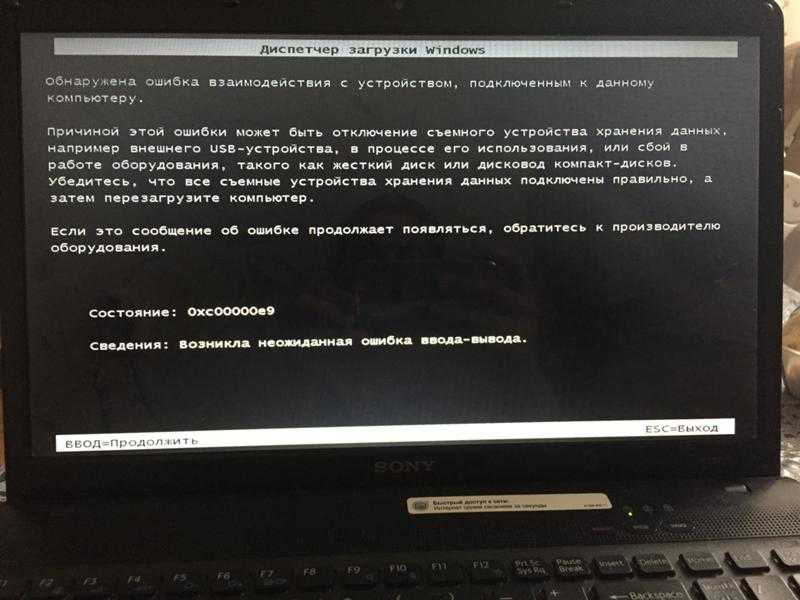

I have a QNAP HS-251+ NAS with two 3TB WD Red NAS hard drives in a RAID1 configuration. I'm quite familiar with Linux and CLI tools, but I have never used mdadm or LVM. So even though I now know that QNAP uses them, I have no experience with them and no knowledge of how QNAP assembles the RAID1 array.

Some weeks ago, during a firmware update, the NAS stopped booting. I'm working on solving that problem, but I would like to recover my data before attempting to plug my disks into the NAS again (QNAP HelpDesk has been basically useless).

I expected to be able to mount the partition easily by simply plugging one of the disks into my laptop (Kubuntu), but that was when I discovered that things are more complex than that.

This is the relevant information I managed to extract:

    sudo dmesg
    usb 3-1: new high-speed USB device number 3 using xhci_hcd
    usb 3-1: New USB device found, idVendor=059b, idProduct=0475, bcdDevice= 0.00
    usb 3-1: New USB device strings: Mfr=1, Product=2, SerialNumber=5
    usb 3-1: Product: USB to ATA/ATAPI Bridge
    usb-storage 3-1:1.0: USB Mass Storage device detected
    scsi 6:0:0:0: Direct-Access WDC WD30 EFRX-68EUZN0 PQ: 0 ANSI: 2 CCS
    sd 6:0:0:0: Attached scsi generic sg2 type 0
    sd 6:0:0:0: Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

Disk geometry and partition table header:

    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Device Boot Start End Sectors Size Id Type

    sudo parted -l
    Error: Invalid argument during seek for read on /dev/sdc
    Error: The backup GPT table is corrupt, but the primary appears OK, so that will be used.
    Sector size (logical/physical): 512B/512B

Mount attempt:

    mount: /mnt: special device /dev/sdc1 does not

I have a problem with an unhealthy file system state:

    11:09:37 System 127.0.0.1 Storage & Snapshots Volume Failed to check file system of volume "Vol_Qsync".
    10:48:02 System 127.0.0.1 Storage & Snapshots Volume File system not clean.

I have 7 /dev/mapper/cachedev# mount points, and the problem is with 3 of them.

    /dev/mapper/cachedev2 /share/CACHEDEV2_DATA ext4 rw,usrjquota=aquota.
